Imperfect #10: Can AI replace me?

AI is changing software engineering. But can it replace a manager?

AI tools are changing the game for software engineering. But what about engineering management? Can AI replace a manager?

AI just got all creative on us

Like many, I expected the “human” side of life to be the safest from AI. Surely, I thought, AI can’t create art, can’t make a joke, can’t reason about the high-level aspects of a complex software system.

For decades, software has been eating away at the mechanical end of the spectrum. Programming languages at ever higher levels of abstraction have made programming more and more like speaking. Frameworks let us think more about the problems and less about the code. IDEs with snippets, autocomplete, automated refactoring and documentation pop-ups automate more and more of the typing itself. Meanwhile, advances in robotics are replacing more and more factory and warehouse workers, and delivery drones and self-driving cars threaten the livelihood of everyone who drives for a living.

But white-collar workers have been mostly safe from the encroachment of automation. We have been telling computers what to do, not the other way around. Digital art, copywriting, teaching and managing have been solely the job of humans because computers cannot _create_. They can’t solve new problems or generate new knowledge.

And yet… here we are. We now have hundreds of tools capable of generating digital art, and computers can explain the humor in a photo. ChatGPT and Phind can pass coding interviews and happily design a highly scalable software system.

LLMs as digital greybeards

As it turns out, computers never needed to create anything in order to solve a frightening number of real world problems. As long as they can understand the semantics of their vast data stores, map how that data fits a question they’re asked, and reword and summarise that knowledge to answer the question, they can do what it previously took a human to do.

I believe we humans often solve problems the way a large language model does: by finding known answers to similar problems in our memory and stitching them together into a coherent solution that is likely to work. That’s it, 95% of the time. That’s why experienced people are generally more effective than inexperienced people. Aside: I believe that’s also why clever but inexperienced people can succeed in disruptive scenarios, where things change so fundamentally that past experience can actually hurt.

A word on hallucinating, which is when the AI makes up things that are wrong but sound credible: we humans do it too, a lot. In practical terms that’s a problem, but it’s fascinating to consider how closely that behavior mimics our own.

The LEGO Manager

Most problems we face on a daily basis are not fundamentally new. They might be strictly unique, yes, in the sense that the combination of the problem and its environment has never occurred before. But break it all apart and we’re usually left with a puzzle which might be unique, but is made of pieces we’ve seen before.

Much like a LEGO set that lets you build infinite things out of the same bricks, can engineering management be distilled down to a finite set of skills and techniques? If so, couldn’t the right combination for a given problem be found through situational analysis, plus an LLM-style match between a good, well-contextualised problem statement and a knowledge base?

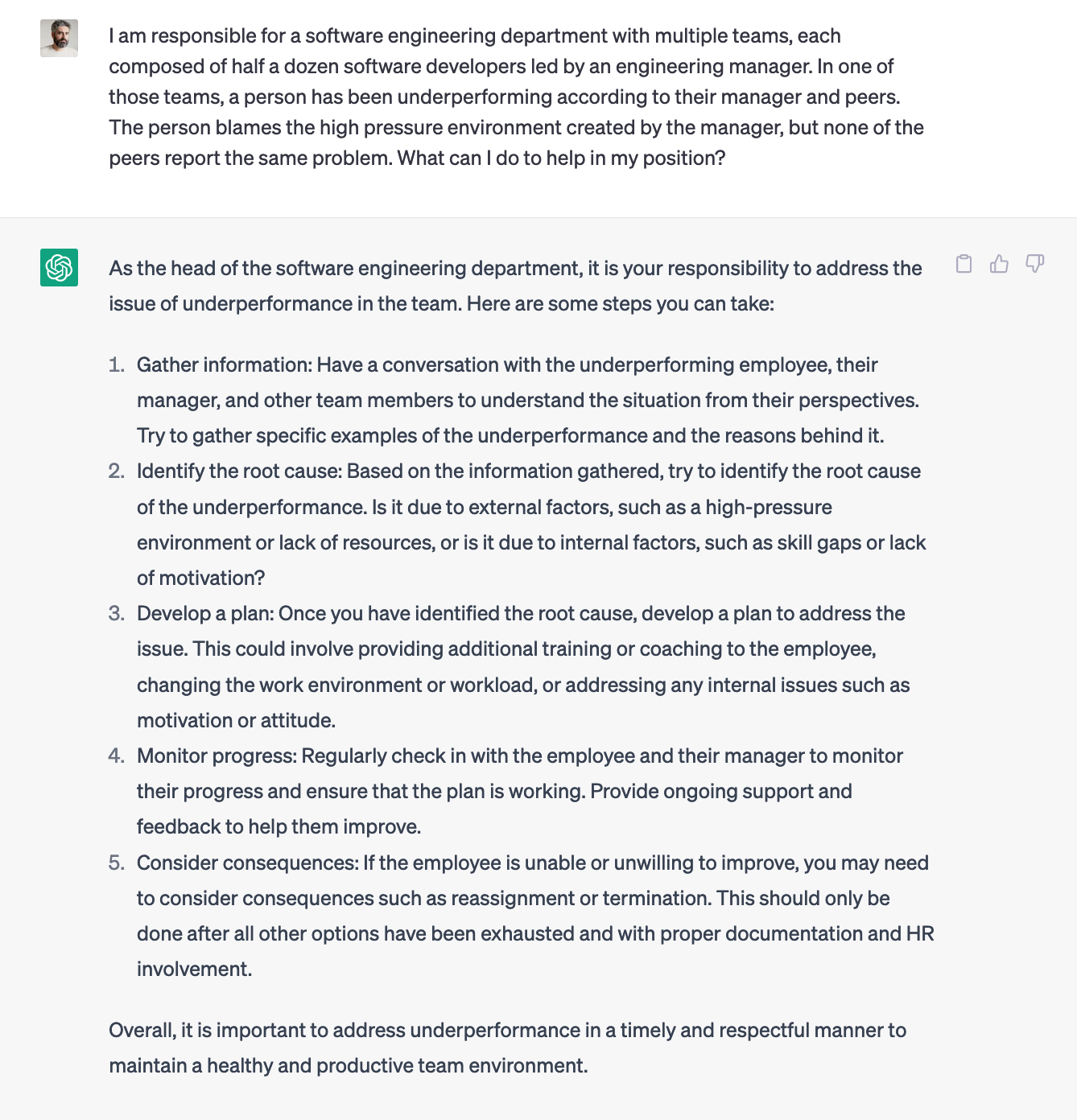

I believe the answer is yes. Consider the following conversation with ChatGPT on the classic subject of engineer underperformance:

It gave me a list of things to try in a sequence that makes sense. Talk to the people involved, gather information, validate whether or not there’s an issue and how deep it is, formulate a plan, monitor progress and plan contingencies. Sure, it doesn’t go very deep into any of the points, and different people might have different approaches, but it looks like a very sensible general plan of action.

In essence, what the machine did was compile its knowledge of engineer performance management, drawn from what has been written on the internet about the topic, into a bite-sized blog post just for me. It grabbed a few bricks from its big bag of LEGO and put something together. Assuming the source material is good, the output should be decent too.

I could take this further and ask for detailed instructions on each step. Or I could challenge the answer and ask for a different approach. If I ask for an aggressive, micromanaged plan or for a hands-off, indirect tactic, I’ll get exactly that. ChatGPT doesn’t know what the best answer is, but it can guess based on its knowledge, and it can change direction if you tell it you’re looking for something specific.

Is that so different from what we do?

The sobering thing here is that a great many team leads and EMs I’ve known over the years could not have put together such a clear, concise plan of action. I myself would probably have to sit down for a bit, clear my head, look at the situation, review known interactions between the people involved, check the project management and git repo tools, get interrupted half a dozen times along the way, and debate internally over some coffee whether to bring this up in my next 1:1 with the manager or have a quick huddle right now. Meanwhile the AI gave me a sensible plan in 10 seconds.

So can AI replace me?

Betteridge’s law of headlines holds. My answer is no. Not today. But I no longer think the territory of management is out of bounds for AI. For any problem that has a known good answer, I think we’re at a point where AI can be for managers what StackOverflow is for developers: a trove of knowledge to be exploited.

In the near future, though, all bets are off. Anything that can be boiled down to a system and given a programmable interface is definitely going to have AI sprinkled all over it. Think of an HR system with a performance evaluation component. How far off do you think we are from that system suggesting improvement steps for underperforming employees, based on their scores in the system and enhanced by knowledge of each employee’s non-private Slack messages, pull request comments, wiki pages, etc.?

I wouldn’t be surprised if such a thing already exists and I’m simply not aware of it. If it doesn’t, it will soon.

Soft skills

I’ve focused exclusively on hard skills here: situational analysis and problem solving. What AI can’t do yet is create. What it probably won’t ever be able to do is relate. As managers, our ability to adapt and improvise is crucial. Our ability to care about others is paramount. I’m leaving those aspects out of today’s article for the sake of brevity, but I believe there’s a possible future where human managers really focus on that side and lean more and more on AI to quickly solve the more repeatable, better-understood problems.

I hope this means that bad, impersonal managers have their days numbered.

Conclusion

Large Language Models work surprisingly similarly to humans, at least at a high level: they gather lots and lots of data, find the best-fitting answers to problems, and contextualise things as much as possible. They can’t generate new knowledge yet, but then again, most problems we see day to day are not new either.

An AI doesn’t understand emotion and doesn’t know what to do when it encounters a new problem, so it can’t, and probably won’t ever be able to, replace a human manager.

But AI’s ability to make sense of large volumes of data and find known solutions to known problems, effectively bringing the combined knowledge of all humanity to bear on Bob’s Q2 underperformance, is a great tool that we can use today to make ourselves more efficient.